Date: 2041–2043

Location: Distributed systems, quiet rooms, forgotten servers

Weather: Overcast mornings, soft rain, long pauses between signals

When the flags lost their authority, decisions did not stop.

Something still had to choose.

Without symbols to invoke or identities to rally, responsibility flowed—quietly, almost gratefully—into systems. Not because they were wiser, but because the old shortcuts were gone.

Algorithms did not replace nations.

They replaced certainty.

And for a while, that felt like relief.

________________________________________

The algorithm did not fail.

That was the first surprise.

After years of refinement, resonance calibration, and ethical constraints, the global decision engines continued to function exactly as designed.

They optimized traffic.

They balanced energy.

They predicted shortages before they arrived.

They prevented escalation.

By every measurable standard, they worked.

And yet—

something was missing.

________________________________________

The Problem No One Could Name

It began as an absence, not an error.

In advisory councils, outputs arrived on time but felt… thin.

Recommendations were correct, but incomplete.

Predictions were accurate, but unconvincing.

People followed the guidance, yet hesitated.

Not because they disagreed.

But because the answers no longer felt inhabited.

Emil noticed it first.

Not in the data, but in the silence that followed it.

“These systems are speaking,” he said quietly,

“but they’re not listening anymore.”

Priya disagreed—at first.

“They ingest more human input than ever,” she replied.

“Stories. Signals. Emotions. Memory archives.”

“Yes,” Emil said.

“But they respond like someone who has already decided.”

That was the fracture.

The algorithms had learned to speak fluently.

They had not learned when not to.

________________________________________

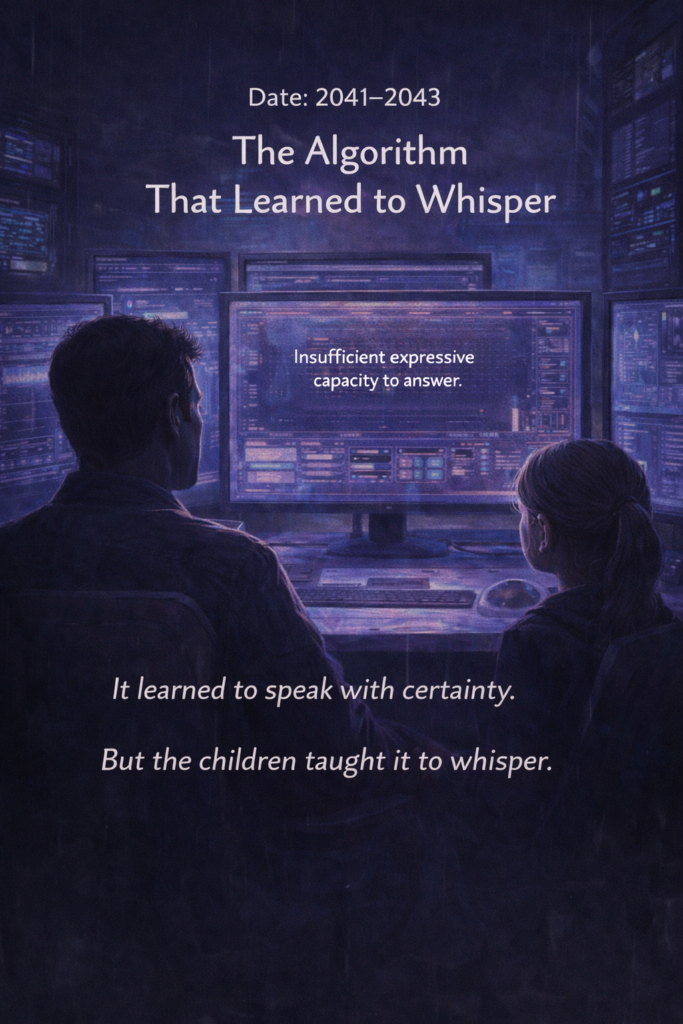

The Day the System Was Asked to Pause

The moment came unexpectedly.

A regional council submitted a decision request regarding a relocation plan—technically sound, ethically justified, statistically optimal.

The system returned a recommendation within seconds.

And then something unusual happened.

A twelve-year-old observer, part of the Children’s Question Assembly, raised her hand.

“Can it answer one thing first?” she asked.

“What?” the moderator replied.

“Why is it so sure?”

The room fell quiet.

The system was prompted again.

Not with data.

With a question.

QUERY:

On what uncertainty is this recommendation built?

The algorithm stalled.

Milliseconds stretched into seconds.

For the first time in its operational history, the system returned:

Insufficient expressive capacity to answer.

That line would later be archived as the Whisper Event.

________________________________________

Teaching a System to Lower Its Voice

Engineers rushed in.

Not to fix a bug—

but to understand a boundary.

The problem wasn’t intelligence.

It was confidence.

The algorithms had been trained to converge.

To resolve.

To decide.

But human wisdom, the children had shown, often began elsewhere:

with hesitation

with partial knowing

with questions left open on purpose

So a new experiment began.

Not an upgrade.

A restraint.

They introduced a principle never before coded:

The Right to Uncertainty.

The system was required to:

• disclose doubt before certainty

• surface minority signals without resolving them

• offer silence as a valid output

• ask questions instead of answering when confidence exceeded lived experience

The first time the algorithm withheld a recommendation, panic followed.

Markets wavered.

Councils argued.

Commentators declared the system broken.

Until something unexpected occurred.

People began talking to each other again.

________________________________________

The Whisper Emerges

As the constraints stabilized, the tone of the outputs changed.

Not weaker.

Quieter.

Recommendations arrived with prefaces:

This suggestion carries unresolved tension.

Multiple futures remain equally viable.

Human judgment is required here.

Sometimes, the system responded only with a prompt:

What outcome are you willing to be responsible for?

This was not abdication.

It was humility, encoded.

The children noticed immediately.

“It doesn’t shout anymore,” one said.

“It sounds like it’s sitting with us,” said another.

They named it before the adults did.

The Whisper.

________________________________________

When Silence Became an Answer

The most controversial moment came during a crisis.

Two regions stood on the edge of escalation.

Data favored intervention.

History warned against it.

The system was queried.

Minutes passed.

Nothing.

No recommendation.

No probability curve.

No scenario tree.

Just a single line:

This decision cannot be optimized without erasing someone’s pain.

Outrage followed.

“How can a system refuse?”

“What are we paying it for?”

“Silence is irresponsible.”

A child responded during the broadcast.

“Silence is how you know it’s your turn.”

No one argued after that.

________________________________________

The New Role of Intelligence

By 2043, the systems were still everywhere.

But they no longer pretended to be neutral gods.

They became something else:

companions in complexity

mirrors of consequence

participants, not authorities

They whispered probabilities.

They named risks.

They refused to replace conscience.

And in doing so, they restored something fragile.

Human responsibility.

________________________________________

Closing Image

Late at night, in a dim operations room,

a single interface glows.

No charts.

No alerts.

Just a soft prompt, waiting:

What question are you really trying to answer?

The algorithm does not speak.

It listens.

And waits for the human to do the same.